Client Error Guide

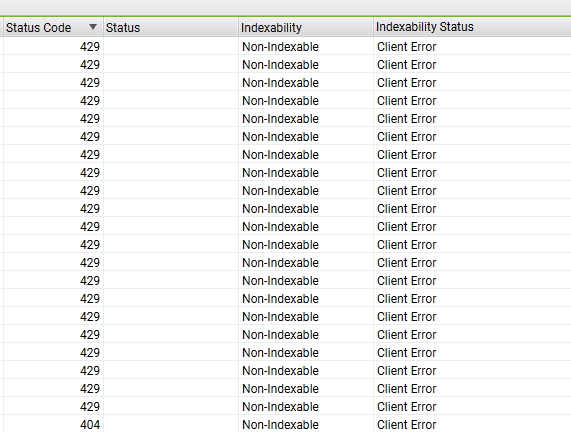

Sometimes while trying to crawl websites, Screaming Frog can encounter “Status 429” Client error which looks like this –

In this guide, we will cover:

- What error code 429 means, both in general and in the context of Screaming Frog

- Ways to resolve this error, including the response from the Screaming Frog team

Status 429 Meaning as per Chrome & Mozilla Web Docs

As per the Mozilla and Chrome developer guides, status code 429 means “Too Many Requests”.

Here’s the quote from the Mozilla website:

The HTTP 429 Too Many Requests response status code indicates the user has sent too many requests in a given amount of time (“rate limiting”).

In Screaming Frog, this usually happens when the web server rate-limits or blocks the Screaming Frog crawler for sending too many automated crawl requests.

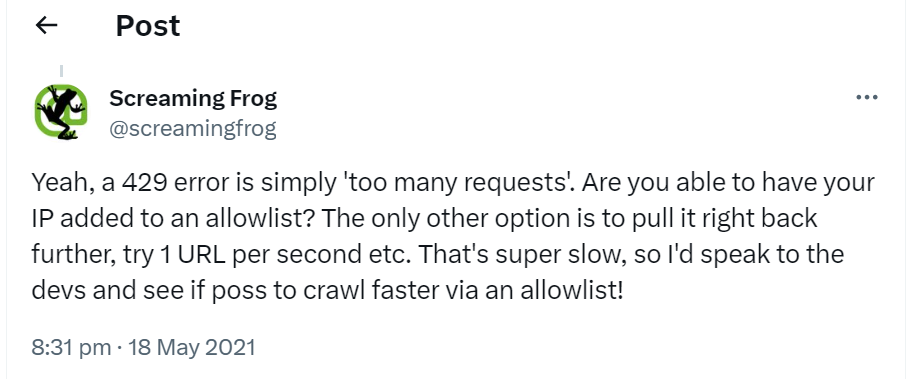

It can be frustrating, since you likely won’t be able to generate recommendations for the affected pages. In fact, in a previous post on X (Twitter), the Screaming Frog team confirmed that this status means “too many requests” to the server.
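To make the rate-limiting idea concrete, here is a minimal Python sketch of how a client might decide how long to pause after a 429. It is not how Screaming Frog works internally. It assumes the server sends the optional `Retry-After` header, which HTTP allows on a 429 response either as a number of seconds or as a date. The function name `retry_delay_seconds` and the 60-second fallback are made up for this illustration.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_delay_seconds(status, headers, default=60.0, now=None):
    """Return how many seconds to pause after a response (0.0 if not a 429).

    `headers` is a plain dict here, so the key lookup is case-sensitive.
    `default` is an arbitrary fallback chosen for this sketch.
    """
    if status != 429:
        return 0.0

    value = headers.get("Retry-After")
    if value is None:
        # The header is optional, so fall back to a fixed pause.
        return default

    value = value.strip()
    if value.isdigit():
        # Form 1: a whole number of seconds, e.g. "Retry-After: 120"
        return float(value)

    try:
        # Form 2: an HTTP date, e.g. "Wed, 21 Oct 2015 07:28:00 GMT"
        retry_at = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        return default

    if retry_at.tzinfo is None:
        # Treat a date without zone information as UTC.
        retry_at = retry_at.replace(tzinfo=timezone.utc)

    now = now or datetime.now(timezone.utc)
    return max(0.0, (retry_at - now).total_seconds())
```

A crawler script could call this after every response and sleep for the returned number of seconds before sending the next request.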

Ways to resolve 429 Error in Screaming Frog

There are mainly 3 trial-and-error methods that can be used to fix this:

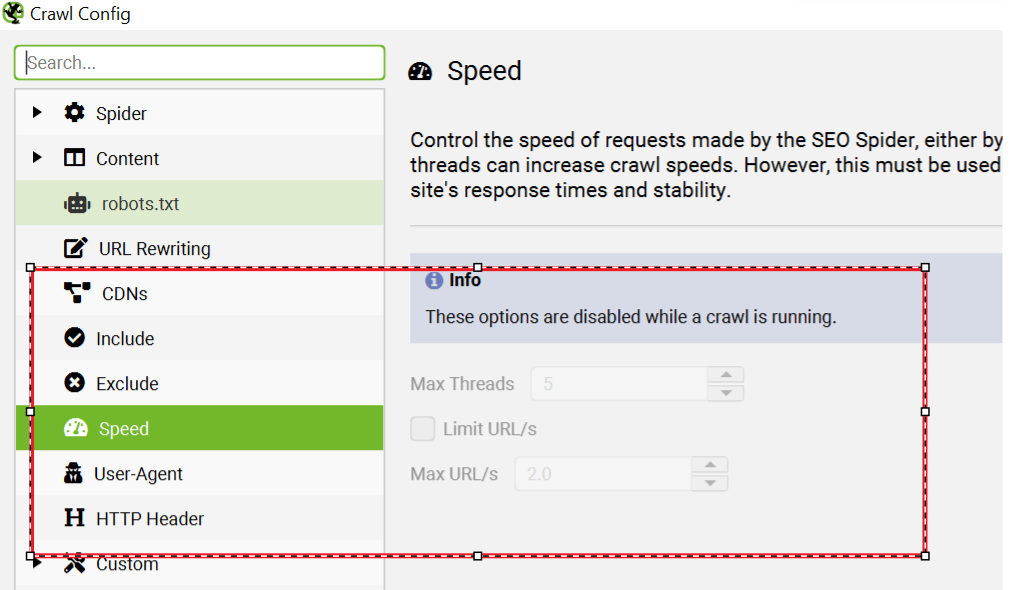

Solution 1) Since the website is blocking the Screaming Frog crawler for sending “too many requests in a short amount of time”, lowering the crawl speed can sometimes resolve a Status 429.

Here’s how to do it in Screaming Frog:

Go to Configuration>Speed

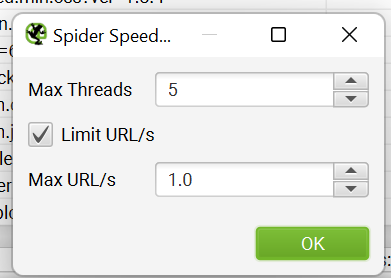

To slow down a crawl, the simplest setting to use is the “Max URI/s” option.

This option sets the maximum number of URL requests per second. For instance, if you set it to 1, Screaming Frog will crawl at most one URL per second, as shown in the screenshot below.

If the server is blocking Screaming Frog outright rather than just rate-limiting it, Error 429 might continue to occur even at the lower speed.

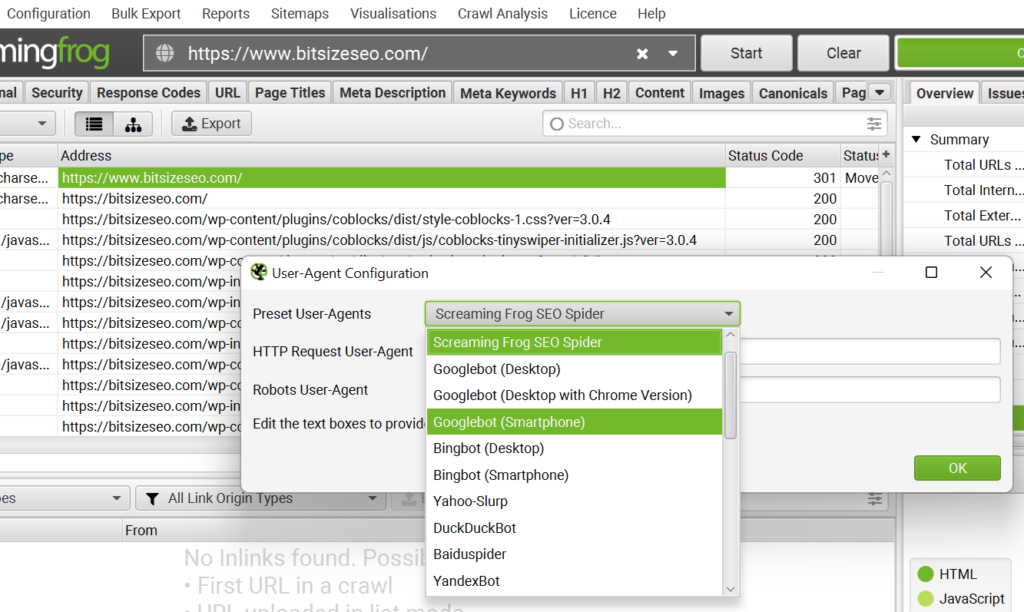
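For readers who script their own checks, the effect of a “Max URI/s” limit can be approximated in a few lines of Python. This is only a sketch of the general throttling idea, not Screaming Frog's actual implementation. The `Throttle` class and its parameter names are hypothetical.

```python
import time


class Throttle:
    """Space out calls so that at most `max_per_second` proceed each second."""

    def __init__(self, max_per_second, clock=time.monotonic, sleep=time.sleep):
        if max_per_second <= 0:
            raise ValueError("max_per_second must be positive")
        # A limit of 1 request per second means a 1.0 s gap between requests.
        self.min_interval = 1.0 / max_per_second
        self._clock = clock      # injectable so the class can be tested
        self._sleep = sleep
        self._next_allowed = None

    def wait(self):
        """Block until the next request is allowed, then reserve a slot."""
        now = self._clock()
        if self._next_allowed is not None and now < self._next_allowed:
            self._sleep(self._next_allowed - now)
            now = self._next_allowed
        self._next_allowed = now + self.min_interval


# Usage sketch (fetch() is a placeholder for your own request code):
# throttle = Throttle(max_per_second=1)
# for url in urls:
#     throttle.wait()
#     fetch(url)
```

With `max_per_second=1`, the first call to `wait()` returns immediately and each later call pauses until a full second has passed since the previous request slot.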

Solution 2) Change the User Agent under Configuration>User Agent>Preset User Agents to Googlebot (any Googlebot option is okay)

In Screaming Frog, the “preset user agents” refer to predefined settings for user agents that the software uses when crawling a website.

User agents are pieces of software, like web browsers or web crawlers, that access web pages.

Each user agent might be identified differently to websites, and some websites might serve different content or behave differently based on the user agent.

Screaming Frog allows you to select a preset user agent (such as Googlebot, Bingbot, or a mobile browser) or specify a custom one, so that you can see how a website responds to different types of visitors.

For this fix, you are changing the crawl user agent from Screaming Frog Spider to Googlebot. In many cases this solution will not work, but it is worth a try.
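Outside Screaming Frog, you can spot-check whether a server treats user agents differently by sending a single request with a chosen `User-Agent` header. The sketch below uses only the Python standard library. The Googlebot string is the commonly published desktop token and may not match Screaming Frog's presets exactly, so treat it as an assumption. The helper names `build_request` and `fetch_status` are hypothetical.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen

# Assumed value: a widely published Googlebot desktop user-agent string.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_request(url, user_agent):
    """Create a request that identifies itself with the given user agent."""
    return Request(url, headers={"User-Agent": user_agent})


def fetch_status(url, user_agent, timeout=10):
    """Return the HTTP status code the server sends for this user agent."""
    try:
        with urlopen(build_request(url, user_agent), timeout=timeout) as resp:
            return resp.status
    except HTTPError as err:
        # urlopen raises for 4xx/5xx responses; the code is on the exception.
        return err.code


# Usage sketch (replace the URL with a page you are allowed to test):
# print(fetch_status("https://example.com/", GOOGLEBOT_UA))
```

If the status differs between user agents, the server is probably filtering on that header. If both return 429, the block is more likely tied to request rate or IP address.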

Last & final Solution 3) Ask the site owner to whitelist the Screaming Frog Spider

If Solutions 1 and 2 both fail, it is reasonable to conclude that the website is blocking the Spider.

In that case, working with the site owners to set up an exception so that the Screaming Frog Spider is not blocked is the best option.

You can ask the client to whitelist your IP address on their server and then start crawling.

Additional Resources

Screaming Frog Help Article on Error 429

Mozilla Web Docs on Error 429