Screaming Frog Tips – Beginner to Advanced Level

The name “Screaming Frog” itself carries an intriguing story, inspired by a resilient frog that refused to back down when confronted by two cats in Dan’s own backyard. 😛

Okay, let’s not digress from the main topic and jump straight in.

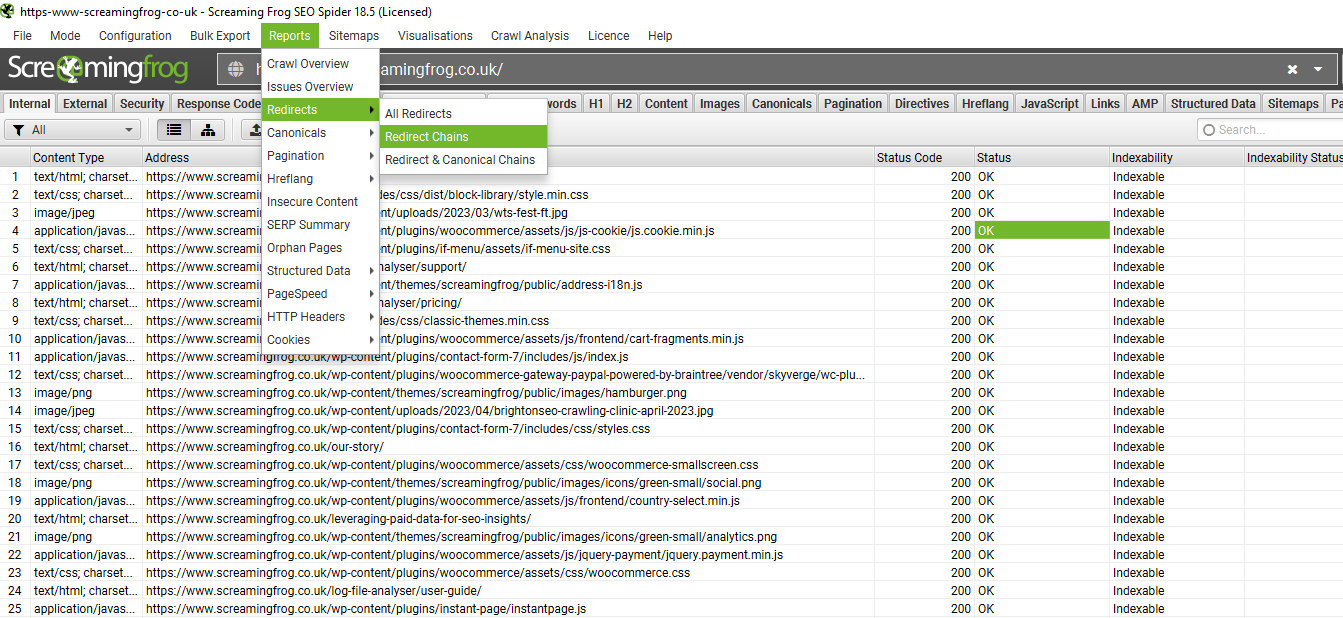

Tip 1 – How to check redirect chains in Screaming Frog

Path – Click ‘Reports > Redirects > Redirect Chains’ to view chains and loops.

Steps in Detail

- Launch Screaming Frog SEO Spider and enter the website URL you want to crawl in the “Enter URL to spider” field.

- Click on the “Start” button to initiate the crawl process.

- Once the crawl is complete, open the “Redirect Chains” report. You can find it under Reports > Redirects in the top menu.

- In the “Redirect Chains” report, you will see a list of URLs that are part of redirect chains on your website.

- Analyze the URLs listed to identify any redirect loops or excessive redirect chains that might be impacting website performance or causing indexing issues.

- Pay attention to the status codes and target URLs in the report, as they provide insights into the specific redirects and their destinations.

- Use this information to investigate and address any problematic redirect chains. Consider implementing appropriate redirects, removing unnecessary redirects, or optimizing redirect paths to improve website speed and user experience.

Similarly, here is how to check that there aren’t any long or multiple redirect chains:

Look for URLs that have a high number of redirect hops or multiple entries indicating redirection.

Examine the target URLs within the redirect chains to ensure they are reaching the desired final destination and not unnecessarily going through multiple redirects.
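If you want to double-check a chain outside the tool, the same idea can be sketched in a few lines of Python. This is only an illustration, not how Screaming Frog works internally: the `redirects` mapping (source URL to redirect target) and the `trace_chain` helper are hypothetical names, and the example URLs are made up.

```python
def trace_chain(start, redirects, max_hops=10):
    """Follow redirects from `start` and return (chain, is_loop)."""
    chain = [start]
    seen = {start}
    current = start
    # Stop when the URL no longer redirects or the hop limit is reached.
    while current in redirects and len(chain) - 1 < max_hops:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            # We came back to a URL already in the chain: a redirect loop.
            return chain, True
        seen.add(current)
    return chain, False


# Hypothetical data: source URL -> redirect target.
redirects = {
    "http://example.com/a": "https://example.com/a",
    "https://example.com/a": "https://example.com/b",
    "https://example.com/x": "https://example.com/y",
    "https://example.com/y": "https://example.com/x",
}

chain, is_loop = trace_chain("http://example.com/a", redirects)
hops = len(chain) - 1  # number of redirects before the final destination
```

A chain with more than one hop is a candidate for pointing the first URL straight at the final destination.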

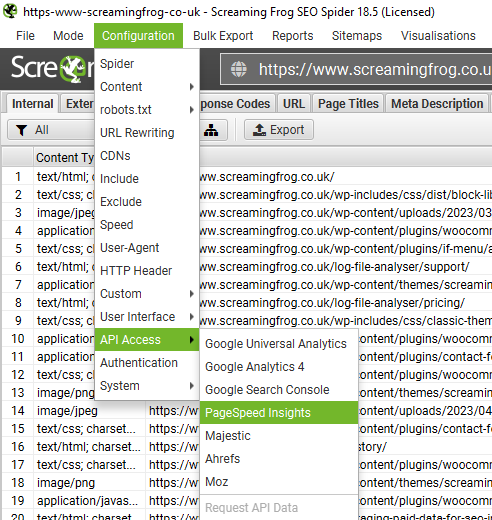

Tip 2 – How to connect Screaming Frog to PageSpeed Insights

Path – Go to Configuration in the top-left menu > API Access > PageSpeed Insights

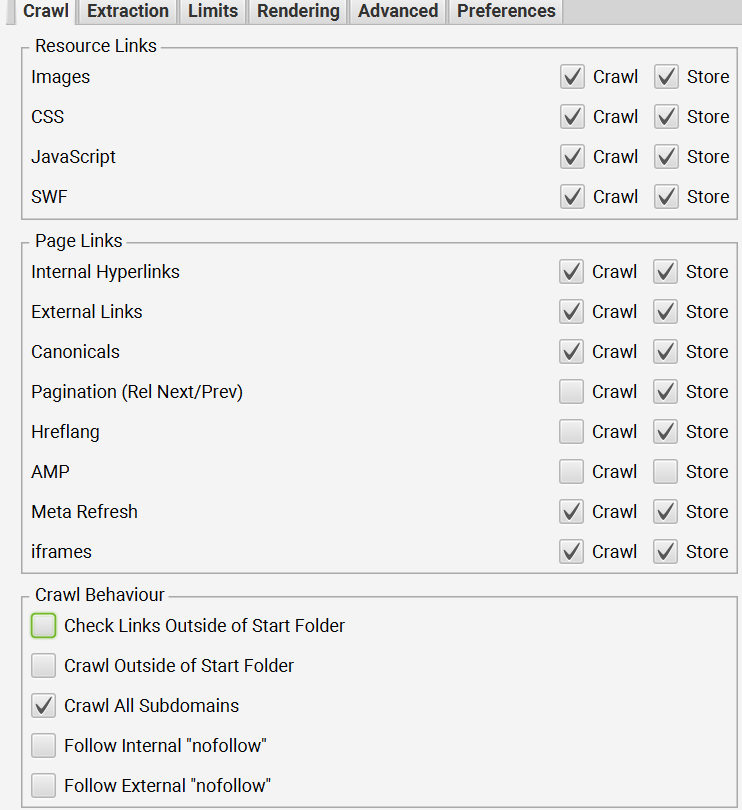
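For context, the integration relies on Google’s PageSpeed Insights API. The sketch below only builds a request URL for the public v5 `runPagespeed` endpoint so you can see what such a call looks like; it does not send anything. The `build_psi_url` helper is a hypothetical name and `YOUR_API_KEY` is a placeholder, not a real key.

```python
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url, api_key, strategy="mobile"):
    """Return a PageSpeed Insights v5 request URL for one page."""
    params = {"url": page_url, "key": api_key, "strategy": strategy}
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


# Placeholder key: replace with your own before making a real request.
request_url = build_psi_url(
    "https://www.screamingfrog.co.uk/seo-spider/", "YOUR_API_KEY"
)
```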

Tip 3 – How to Crawl only a Folder or Subfolder/Section of the website

For example, let’s say you want to crawl only the pages under the /seo-spider/ subfolder, starting from https://www.screamingfrog.co.uk/seo-spider/

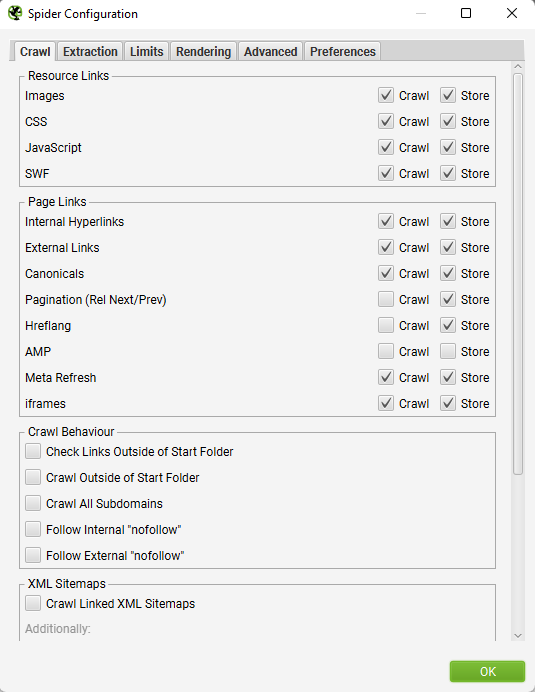

Path – Go to Configuration > Spider and untick “Crawl Outside of Start Folder”

As you can see, the crawl above returns only the pages under the /seo-spider/ folder.

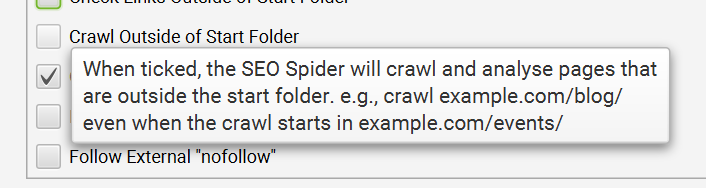

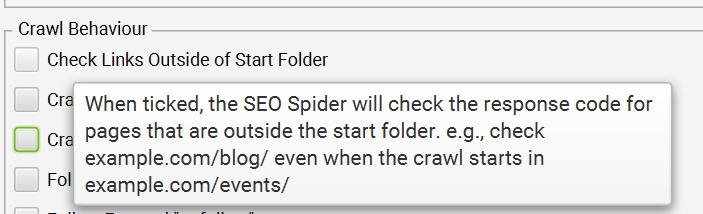

This is different from the other option (see image), “Check Links Outside of Start Folder”, which, when ticked, checks and reports the response code (Status 200, Status 4XX, etc.) for pages that are outside of the start folder.
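To make the “start folder” idea concrete, here is a rough Python approximation of the rule. It is a sketch under my own assumptions, not the tool’s actual logic: `in_start_folder` is a hypothetical helper that treats a URL as in scope when it is on the same host and its path begins with the start URL’s folder.

```python
from urllib.parse import urlsplit


def in_start_folder(url, start_url):
    """Return True if `url` sits inside the folder of `start_url`."""
    start = urlsplit(start_url)
    target = urlsplit(url)
    # Keep everything up to and including the last "/" of the start path.
    folder = start.path[: start.path.rfind("/") + 1]
    return target.netloc == start.netloc and target.path.startswith(folder)


start = "https://www.screamingfrog.co.uk/seo-spider/"
inside = in_start_folder(
    "https://www.screamingfrog.co.uk/seo-spider/user-guide/", start
)
outside = in_start_folder("https://www.screamingfrog.co.uk/blog/", start)
```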

Tip 4 – How to enable subdomain crawling in Screaming Frog

For example, you are looking to identify subdomains used by development or staging servers

Path – Navigate to Configuration > Spider, and ensure that “Crawl all Subdomains” is selected

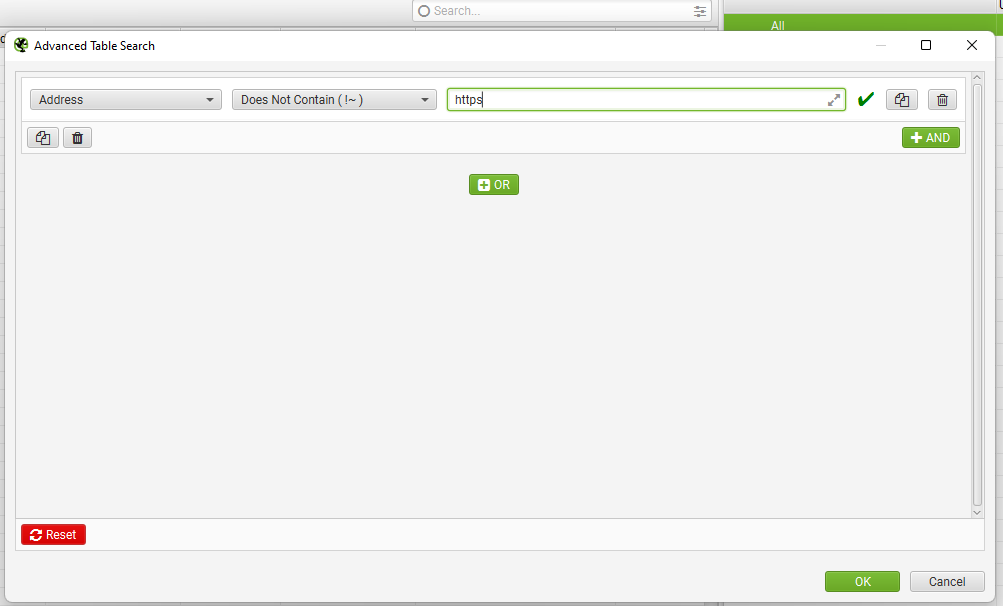
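Once the crawl is exported, you may want to pull out just the subdomains. The snippet below is a small sketch of that step, assuming you already have a list of crawled URLs; `is_subdomain_of` and the example hostnames are hypothetical.

```python
from urllib.parse import urlsplit


def is_subdomain_of(url, root_domain):
    """Return True if the URL's host is a subdomain of `root_domain`."""
    host = (urlsplit(url).hostname or "").lower()
    root = root_domain.lower()
    # The leading dot stops "notexample.com" from matching "example.com".
    return host != root and host.endswith("." + root)


# Hypothetical crawl output.
crawled = [
    "https://example.com/",
    "https://staging.example.com/login",
    "https://dev.example.com/",
    "https://notexample.com/",
]
subdomains = sorted(
    {urlsplit(u).hostname for u in crawled if is_subdomain_of(u, "example.com")}
)
```

Note that under this definition `www.example.com` also counts as a subdomain, so you may want to exclude it explicitly.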

Tip 5 – How to identify non-SSL (insecure) pages in Screaming Frog

Path

- In the “Internal” tab, click on the “Filter” drop-down menu and select “Custom.”

- In the custom filter dialog box, enter the following parameters:

  - Field: Protocol

  - Condition: Does Not Equal (or Not Contains)

  - Value: https://

- Click on the “OK” button to apply the filter.

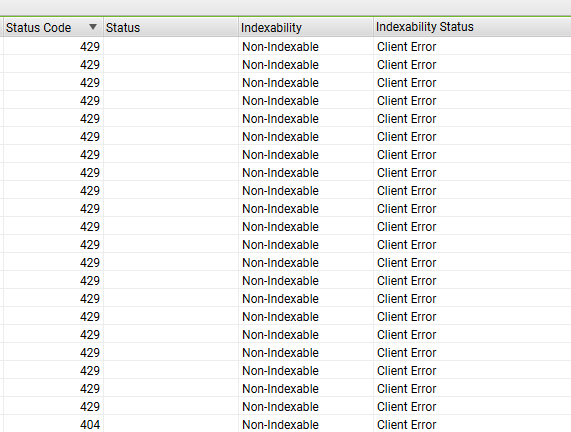
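The same filter is easy to reproduce on an exported URL list. This is a minimal sketch, assuming the URLs are already in a Python list; `non_https_urls` is a hypothetical helper and the sample URLs are invented.

```python
from urllib.parse import urlsplit


def non_https_urls(urls):
    """Return the URLs whose scheme is anything other than https."""
    return [u for u in urls if urlsplit(u).scheme.lower() != "https"]


# Hypothetical exported URLs.
urls = [
    "https://example.com/",
    "http://example.com/old-page",
    "HTTPS://example.com/contact",
    "http://example.com/images/logo.png",
]
insecure = non_https_urls(urls)
```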

Tip 6 – How to fix a 429 error code that stops Screaming Frog from crawling any page

A full, detailed guide is available in the new blog post, the Screaming Frog 429 Error Guide.

Sometimes you might see Status Code 429, which is the “Too Many Requests” response status.

This usually happens when the web server blocks the Screaming Frog crawler for sending too many automated crawl requests.

For Status 429 issues in Screaming Frog, you can try the following options:

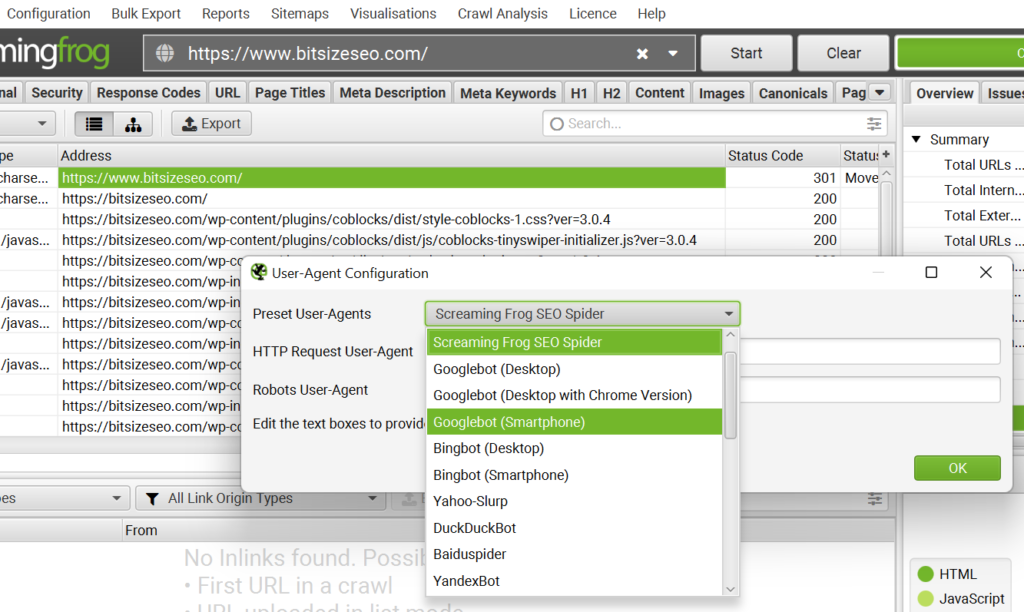

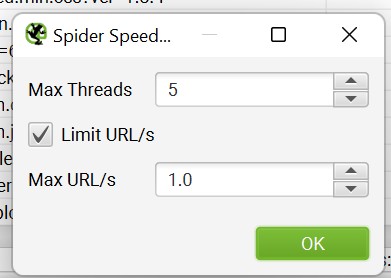

Solution 1) Change the user agent to Googlebot under Configuration > User Agent > Preset User Agents (any Googlebot option is okay)

This changes the crawl user agent from the Screaming Frog SEO Spider to Googlebot.

In many cases this solution will not work, but it is worth a try.

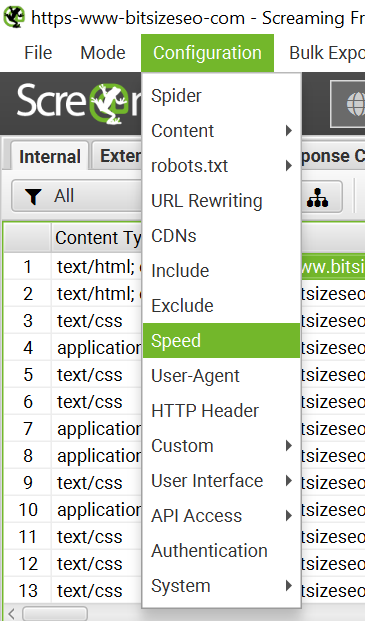

Solution 2) Sometimes lowering the crawl rate (crawl speed) can help with a 429 error

Path – Configuration > Speed

When you want to slow down the speed of a crawl process, it’s simpler to use the “Max URI/s” option.

This option sets the maximum number of URL requests per second. For instance, if you set it to 1, it means the process will only crawl one URL per second, as shown in the screenshot below.

Sometimes this might not work either!
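To see what a requests-per-second cap means in practice, here is a small Python sketch of the idea. It is not Screaming Frog’s implementation: `crawl_with_limit` and the `fetch` callback are hypothetical, and the stub `fetch` below just returns a fixed status instead of making a real request.

```python
import time


def crawl_with_limit(urls, fetch, max_per_second=1.0,
                     sleep=time.sleep, clock=time.monotonic):
    """Call `fetch` on each URL, starting at most `max_per_second` per second."""
    min_interval = 1.0 / max_per_second
    results = []
    last_start = None
    for url in urls:
        if last_start is not None:
            # Wait out whatever is left of the minimum gap between requests.
            wait = min_interval - (clock() - last_start)
            if wait > 0:
                sleep(wait)
        last_start = clock()
        results.append(fetch(url))
    return results


# Stub fetch for illustration only: pretends every URL returns status 200.
statuses = crawl_with_limit(["/a", "/b", "/c"], fetch=lambda url: 200,
                            max_per_second=100)
```

With `max_per_second=1`, the loop would leave at least one second between the start of consecutive requests, which mirrors the setting described above.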

Solution 3) If Solutions 1 and 2 both fail, we can reasonably conclude that the website is blocking the Spider.

In that case, the best option is to work with the site owners to set up an exception so that the Screaming Frog Spider is not blocked. You can ask them to whitelist your IP address and see if this works.

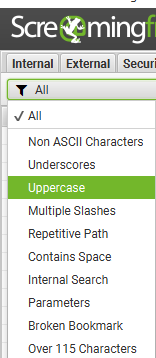

Tip 7 – How to find URLs with capital letters (uppercase) in Screaming Frog

Path – Just below the Internal tab, use the filter for “Uppercase”

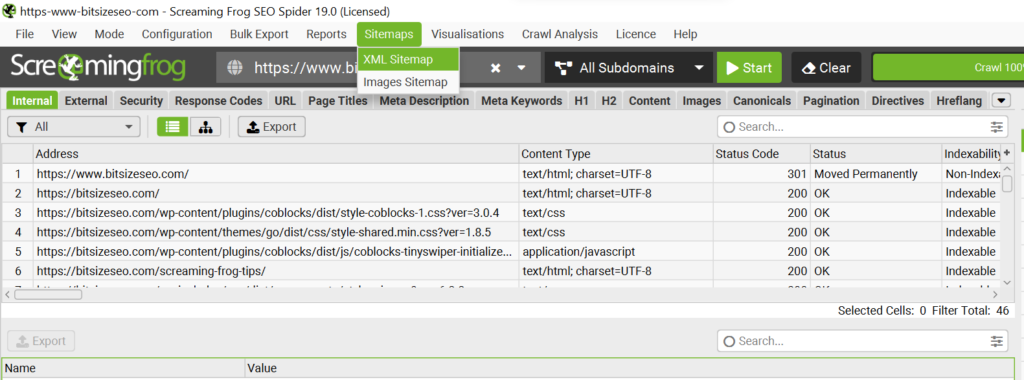
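If you need the same check in a script, a rough equivalent is shown below. It is a sketch based on my own assumptions rather than the tool’s exact rule: `has_uppercase_path` is a hypothetical helper that looks only at the URL path and ignores percent-encoded sequences such as `%C3`, since the hostname is case-insensitive anyway.

```python
import re
from urllib.parse import urlsplit


def has_uppercase_path(url):
    """Return True if the URL path contains an uppercase letter."""
    path = urlsplit(url).path
    # Drop percent-escapes so hex digits like %C3 are not counted.
    path = re.sub(r"%[0-9A-Fa-f]{2}", "", path)
    return any(ch.isupper() for ch in path)


# Hypothetical URLs.
urls = [
    "https://example.com/About-Us",
    "https://example.com/about-us",
    "https://EXAMPLE.com/contact",
]
uppercase_urls = [u for u in urls if has_uppercase_path(u)]
```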

Tip 8 – How to create XML Sitemap using Screaming Frog

Path – In the top navigation, click Sitemaps > XML Sitemap

You can export the XML sitemap based on your preferences.
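For reference, an XML sitemap is a simple file: a `urlset` element containing one `url` entry with a `loc` for each page. The sketch below builds a bare-bones one with Python’s standard library. It only illustrates the file format and is not the output Screaming Frog produces; `build_sitemap` is a hypothetical helper, and optional tags such as `lastmod` are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap string listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" when serialising.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical page list.
sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/search?q=frog&page=2",
])
```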